Short contribution: End-user responsibilities on a Generative AI conversation (from RDV 4/2025, pages 186 to 188)

Ganesh Srinivasan's contribution examines the role of the end user in generative AI systems. It focuses on the responsibilities that arise when GPAI is used for high-risk applications under the EU AI Act, from data integrity and ethical use to compliance obligations and possible consequences.

Editorial preliminary note: The following contribution is by Ganesh Srinivasan, General Manager for Information Security at Icertis, an American provider of contract management software. It is published here in its original English version. The contribution addresses the responsibilities of the end user of a general-purpose AI system (GPAI) from a technical and ethical perspective. Its central concern is the technical and ethical requirements placed on a user who wishes to use a GPAI system for purposes classified as high-risk under the AI Act. Whether, and under what conditions, such a user is additionally deemed the provider of a high-risk AI system under Art. 25(1)(c) of the AI Act, and must therefore, among other things, ensure that the GPAI system meets the AI Act's specific requirements for high-risk AI systems, is a complex question that has not yet been conclusively answered.[1]

An important actor in the lifecycle of general-purpose AI (GPAI) systems, and one that deserves particular attention, is the end user. To put this in context, let us first look at the responsibilities of the various players involved.[2]

Control over the various layers involved in this orchestration must now take the end user into account as well.

Because a generative AI medium is semantic in nature, the control that the end user wields at the application layer, and above all through the PROMPT itself, carries great significance. A traditional web application protects each input field against typical web application attacks; an LLM-powered generative AI application, by contrast, is open to the ‘free will’ of the end user, who can raise a wide array of topics. The relevance of those topics must be validated along the entire process flow, across the application layer and beyond.

Focus on the input provided by the end user

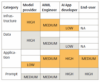

If we consider ONLY the high-risk use of a GPAI system as defined by the EU AI Act, the end user bears the following matrix of responsibilities, which arise from their access via the prompt and from the information they make available to the AI system through data uploads or otherwise.

This underlines the need for a clear Consent Notice to the end user that covers the following points:

- Purpose of Use: Clearly state the intended purposes of the AI system and the specific tasks it is designed to perform.

- User Responsibilities: Outline the responsibilities of the end-user, including the need to provide accurate information, avoid manipulative inputs, and use the system ethically.

- Data Integrity: Emphasize the importance of submitting truthful and authentic data to ensure the system’s reliability and fairness.

- Ethical Use: Highlight the expectation for users to engage with the system ethically, avoiding misuse or manipulation.

- Potential Consequences: Inform users of the potential consequences of misuse, including impacts on fairness, safety, and societal trust.

- Privacy and Security: Provide information on how the system ensures data privacy and security, and the user’s role in maintaining these standards.

- Reporting Mechanisms: Offer clear instructions on how users can report any anomalies, unethical use, or system malfunctions.

- Acknowledgment and Agreement: Include a section where users acknowledge their understanding of the consent notice and agree to comply with the outlined responsibilities.
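To make the checklist above more concrete, here is a minimal Python sketch, assuming an application that displays the consent notice before a high-risk GPAI session and records the user's agreement before any prompt is accepted. The class names `ConsentNotice` and `Session`, the version-based re-consent rule and the `log` placeholder are hypothetical illustrations, not requirements of the EU AI Act or features of any particular product; only the section titles come from the list above.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Section titles taken from the consent-notice checklist above.
REQUIRED_SECTIONS = (
    "Purpose of Use",
    "User Responsibilities",
    "Data Integrity",
    "Ethical Use",
    "Potential Consequences",
    "Privacy and Security",
    "Reporting Mechanisms",
    "Acknowledgment and Agreement",
)


@dataclass
class ConsentNotice:
    """Hypothetical model of a consent notice shown before a high-risk GPAI session."""
    version: str
    sections: dict[str, str]  # section title -> text shown to the end user

    def missing_sections(self) -> list[str]:
        """Return required sections that are absent or empty, in checklist order."""
        return [t for t in REQUIRED_SECTIONS if not self.sections.get(t, "").strip()]


@dataclass
class Session:
    """Hypothetical chat session that only accepts prompts after acknowledgment."""
    notice: ConsentNotice
    acknowledged_version: str | None = None
    log: list[str] = field(default_factory=list)

    def acknowledge(self, agreed: bool) -> None:
        # Only a complete notice may be acknowledged; agreement is tied to its version.
        if self.notice.missing_sections():
            raise ValueError("consent notice is incomplete")
        if agreed:
            self.acknowledged_version = self.notice.version

    def submit_prompt(self, prompt: str) -> bool:
        # Refuse prompts until the user has agreed to the current notice version.
        if self.acknowledged_version != self.notice.version:
            return False
        self.log.append(prompt)  # placeholder for forwarding the prompt to the AI system
        return True
```

In use, a `Session` built on a complete notice rejects prompts until `acknowledge(True)` is called; if the notice's `version` later changes, prompts are rejected again until the user agrees to the new text. Tying agreement to a version is one possible design choice for keeping the acknowledgment in step with the notice actually shown.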

Conclusion

The focus on end-user input also calls for additional guard-rails on the part of application developers and GPAI system owners, guard-rails that apply a semantic classification into high, medium and low risk in the context of the purpose intended for a given system. We may take up that topic in a subsequent edition.

*Ganesh Srinivasan is General Manager for Information Security at Icertis, an American provider of contract management software.

[1] See Schwartmann/Zenner, EuDIR 2025, 3 (8 f.).

[2] For a detailed discussion, see Srinivasan, EuDIR 2025, 51 (editor's note).